Research Fellowship

The Pivotal Research Fellowship is a 9-week program for promising researchers to produce impactful research and accelerate their careers in AI safety and AI governance. Fellows conduct research, work with experienced mentors, participate in workshops & private Q&A sessions with domain experts, and build strong networks within the AI safety research community in London and beyond.

The 26.Q1 Research Fellowship runs from February 9th to April 10th, 2026. Applications are open until Sunday, November 30th, 2025.

Fellows receive:

Direct mentorship from established researchers (see mentors) and research management support

In-person research space in London at the London Initiative for Safe AI alongside leading AI Safety researchers

£6,000 to £8,000 stipend, plus meals and support for travel, accommodation, and compute

This is our 7th research fellowship, building on a strong track record of supporting researchers in tackling important questions about the safety and governance of emerging technology.

Past fellows went on to:

work at organisations like GovAI, SaferAI, UK AISI, IAPS, AI Futures Project, Timaeus, Beneficial AI Foundation, Cooperative AI Foundation, Google DeepMind, FLI, and Institute for Progress

start PhDs at the University of Oxford and Stanford University

found organisations such as PRISM Evals and Catalyze Impact

In our most recent cohort, 70% of fellows who applied for an extension received funding to continue their research, for an average of 4 months after the program concluded. During that time, they continued to receive guidance from their mentor and research manager.

Dates & Locations

February 9th to April 10th 2026, at LISA

Eligibility

Anyone committed to ensuring AI develops safely

Financial Support

£6,000 - £8,000 stipend plus travel, housing, meals & compute

Recommend someone who may be a good fit. Receive $500 for each candidate accepted through your referral.

Research Mentors

Our mentors are leading researchers who guide fellows through high-impact projects. In weekly meetings, they provide hands-on methodological expertise, critical feedback, and strategic direction to help you produce research that addresses the most pressing challenges in AI safety.


Support Details

Fellowship Extensions

We offer extensions of up to 6 months for projects on a good trajectory.

The extension phase is flexible, but typically includes: funding for full-time research, continued work with your mentor and research manager, and access to LISA or another AI safety co-working space.

Fellows & Senior Fellows

We offer fellowship spots as Fellows and as Senior Fellows. Senior Fellows have significant experience or a strong track record in relevant fields (such as a PhD, industry experience, or strong publications). Fellows receive a stipend of £6,000 and Senior Fellows receive £8,000.

Travel, Housing, Co-Working & Compute

In addition to the stipend, we provide:

Travel support to & from London

£2,000 accommodation support for fellows not living in London

Full LISA membership, including lunch and dinner on weekdays

£2,500 for compute costs (can be increased for compute-heavy projects)


FAQs

Application

-

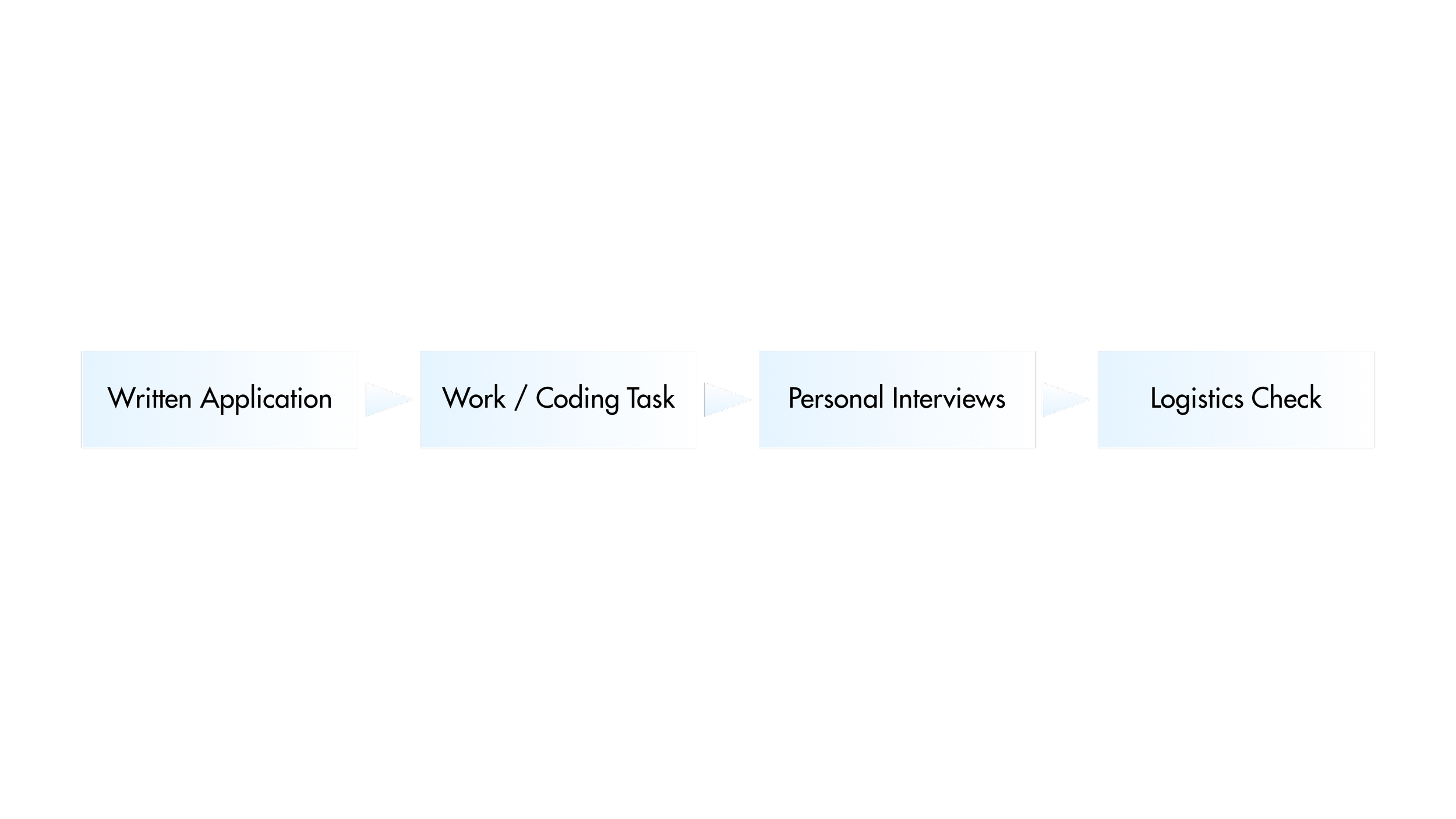

The default is 3 stages:

Written Application

Short work task

Personal, interactive interviews

If you apply to multiple mentors, there may be multiple personal interviews and work tasks.

-

Anyone who is at least 18 years old at the start of the fellowship is eligible to apply.

-

Everyone is required to apply to at least one mentor. Our mentors typically have project ideas or research areas they are interested in exploring. While there may be some flexibility to incorporate your own project idea, this depends on the mentor and their priorities.

There’s also the option to propose your own research project. This option is likely more selective than applying directly to a mentor, but we will accept outstanding applicants. Selecting this option will not change how your direct-to-mentor applications are evaluated. We strongly encourage you to explore our current mentors in depth and select those you'd be excited to work with.

-

We realise that many people participating in our fellowship program bring significant experience. To give these applicants an option, we offer the chance to apply as a “Senior Fellow.” Senior Fellows receive an increased stipend and otherwise have a similar fellowship experience to other fellows.

Fellowship

-

Mentorship structures vary: some mentors may work with multiple fellows, while others focus on individual mentorship. The specific arrangement depends on the mentor’s approach and research focus.

We encourage collaboration among fellows, whether in their main fellowship project or in side projects!

-

You will typically work on a research paper of 10 to 20 pages. However, alternative formats such as blog posts, forum posts, or audiovisual work are also welcome.

-

Our Research Fellowship is an in-person program designed to maximise collaboration and mentorship by bringing talented researchers together in London.

We consider remote arrangements only for exceptional candidates with demonstrated independent research capabilities.

If you cannot relocate to London for the fellowship period, please still apply, but note this limitation in your application.

-

Fellows receive a stipend, plus financial support for travel to and from London. Those who don’t already live in London will also receive £2,000 for accommodation.

-

The fellowship is a full-time commitment, and we make exceptions only very rarely, for extraordinary candidates. Other minor commitments are often no problem and can be flagged in the application form.

-

Meeting all relevant entry requirements is each fellow's responsibility. We provide an information document on entry requirements for the Research Fellowship, but we cannot guarantee its accuracy or completeness.

We may be able to offer assistance during the process, depending on the circumstances.

About Pivotal

-

Pivotal is committed to working on global catastrophic risks, which we consider to be among the most important and neglected global challenges. We believe that building a strong field of researchers and policymakers who are dedicated to reducing these risks is important. Our primary focus at the moment is on delivering a high-quality research fellowship to cultivate talent in the field.

-

Global catastrophic risks (GCRs) are events or situations that pose a risk of major harm on a global scale, with the potential to impact millions or even billions of people.

-

We’re always excited to hear from people who want to work with us in some form. You can find out more at “Work With Us.”

-

For all other questions, please reach out!